How the ball gets to the viewer faster – sports livestreaming (almost) without delay

Every year, a very technical term crops up in the coverage of major sporting events that has few friends and is considered the number one mood killer for any live audience: latency.

Latency refers to the delay with which a signal reaches viewers over the different TV reception channels, which do not all deliver it at the same time. Depending on the transmission channel – such as antenna (DVB-T2), digital cable or streaming services on the internet – various technical factors come into play, so that the same goal is celebrated at different moments in different living rooms. The term “live transmission” is, therefore, open to interpretation.

Until recently, the internet – with its streaming services, apps and pay-per-view offers – was considered the slowest channel; compared to satellite signals, there was talk of delays of up to several minutes. During the 2014 World Cup, Heise reported: “Those who use internet services to stay on the ball usually still have plenty of time to pull out their smartphone, start the app and then watch the goal ‘live’ that the others have already cheered for.” (article in German)

But online viewing is growing, and television is increasingly shifting to the internet. The technology is constantly being developed, and minimising latency is not just our day-to-day business as a streaming service provider, but a genuine passion. We are delighted whenever our know-how is successfully used in a live offering for a major sporting event.

We will be happy to provide concrete examples and references on request. Initial measurements taken during sporting events in the summer of 2018 showed that the delay compared to a satellite signal (DVB-S2) had been reduced from what was previously 60 seconds to 5 seconds.

What technology is used?

To optimise latency, we essentially focused on shortening the segments. For HTTP live streaming, the media content is segmented, i.e. divided into small individual video files of a fixed length. These segments are delivered via HTTP and played back on the user’s end device (player) as an uninterrupted stream. Because a player typically buffers several segments before it starts playback, the segment length feeds directly into the delay behind the live event.
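A minimal sketch of why segment length matters so much. It assumes that a player buffers three segments before playback and that encoding, packaging and delivery add a fixed two seconds; both values, and the function name `estimate_latency`, are illustrative assumptions and not measurements from our platform.

```python
def estimate_latency(segment_seconds: float,
                     buffered_segments: int = 3,
                     pipeline_overhead_seconds: float = 2.0) -> float:
    """Rough estimate of the delay behind live for segmented HTTP streaming.

    buffered_segments and pipeline_overhead_seconds are assumed values
    chosen for illustration only.
    """
    if segment_seconds <= 0 or buffered_segments < 1:
        raise ValueError("segment length and buffer depth must be positive")
    # The player cannot start before it holds `buffered_segments` full segments.
    return segment_seconds * buffered_segments + pipeline_overhead_seconds


if __name__ == "__main__":
    for seg in (10.0, 6.0, 2.0, 1.0):
        print(f"{seg:4.0f} s segments: roughly {estimate_latency(seg):.0f} s behind live")
```

Under these assumptions the buffered segments dominate the delay, which is why shortening them is the most effective single lever.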

The delivery of a live stream usually also adapts to the end user’s current connection conditions: the player is served the best quality (highest bit rate) that the connection can sustain. If the end device is connected via fast WiFi, the sharpest images with the highest quality are provided. If the user is sitting in a train with poor reception, for example, the data rate is throttled and the quality is reduced.
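The selection logic can be sketched as follows. The bitrate ladder, the 80 % safety margin and the function name `pick_rendition` are hypothetical; real players use more elaborate heuristics (buffer level, throughput history), so treat this as a simplified illustration of the principle only.

```python
from typing import Sequence

# Hypothetical bitrate ladder in kbit/s, from lowest to highest quality.
LADDER_KBPS = (400, 1200, 2500, 5000, 8000)


def pick_rendition(throughput_kbps: float,
                   ladder: Sequence[int] = LADDER_KBPS,
                   safety_factor: float = 0.8) -> int:
    """Return the highest bitrate that fits into the measured throughput.

    Only a fraction (safety_factor) of the throughput is used, so that
    small fluctuations do not immediately stall playback.
    """
    budget = throughput_kbps * safety_factor
    candidates = [bitrate for bitrate in sorted(ladder) if bitrate <= budget]
    # Fall back to the lowest rendition if even that exceeds the budget.
    return candidates[-1] if candidates else min(ladder)


if __name__ == "__main__":
    print(pick_rendition(20000))  # fast WiFi: top rendition
    print(pick_rendition(1600))   # weak mobile reception: low rendition
```

The player repeats this decision for every segment, which is another reason short segments help: the stream can react to changing reception conditions more quickly.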

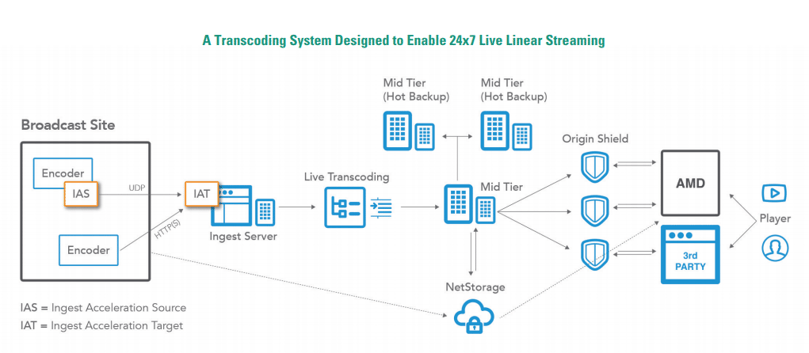

Short segment lengths, however, bring with them a massive increase in requests to the delivering servers and require a high-performance platform to ensure smooth operation. Our colleagues at Akamai provide us with this platform in the form of “Media Services Live 4”.
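A back-of-the-envelope calculation shows the scale of the effect. It assumes that every viewer fetches one segment per segment duration and, for a live stream, reloads the playlist about as often; the audience size and the function name `requests_per_second` are made up for illustration.

```python
def requests_per_second(viewers: int,
                        segment_seconds: float,
                        include_playlist: bool = True) -> float:
    """Approximate request rate that a live audience generates.

    Assumption: one segment request per viewer per segment duration,
    plus one playlist reload in the same interval if include_playlist is set.
    """
    if viewers < 0 or segment_seconds <= 0:
        raise ValueError("viewers must be >= 0 and segment length > 0")
    requests_per_interval = 2 if include_playlist else 1
    return viewers * requests_per_interval / segment_seconds


if __name__ == "__main__":
    audience = 100_000  # hypothetical number of concurrent viewers
    for seg in (10.0, 2.0, 1.0):
        rate = requests_per_second(audience, seg)
        print(f"{seg:4.0f} s segments: {rate:,.0f} requests/s")
```

In this simplified model, cutting the segment length from ten seconds to two multiplies the request rate by five for the same audience.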

On the subject of latency, here are some exciting resources from our technology partners:

- https://developer.akamai.com/edge-2017/videos/the-road-to-ultra-low-latency

The Road to Ultra Low Latency: Delivering “better than broadcast” live streams presents a number of technical challenges, and achieving ultra-low latency is certainly near the top of the list. But “challenging” doesn’t mean impossible – and Akamai can help. Join this session to learn how you can leverage the latest features of the Common Media Application Format (CMAF) with Akamai Media Services Live to create live streams with ultra-low latency for amazing video experiences.

- https://www.wowza.com/blog/what-is-low-latency-and-who-needs-it

What Is Low Latency? So, if several seconds of latency is normal, what is low latency? When it comes to streaming, low latency describes a glass-to-glass delay of five seconds or less. That said, it’s a subjective term. The popular Apple HLS streaming protocol defaults to approximately 30 seconds of latency (more on this below), while traditional cable and satellite broadcasts are viewed with about a five-second delay behind the live event.

- https://aws.amazon.com/de/blogs/media/how-to-compete-with-broadcast-latency-using-current-adaptive-bitrate-technologies-part-1/

Why is latency a problem for live video streaming? Whenever content delivery is time sensitive, whether it be TV content like sports, games, or news or pure OTT content such as eSports or gambling, you cannot afford a delay. Waiting kills the suspense; waiting turns you into a second-class citizen in the world of entertainment and real-time information. An obvious example is watching a soccer game: Your neighbor watches it on traditional TV and shouts through the walls on each goal of his favorite team, usually the same as yours, while you have to wait 25 or 30 seconds before you can see the same goal with your OTT service. This is highly frustrating, and similar to when your favorite singing contest results are spoiled by a Twitter or Facebook feed that you monitor alongside the streaming channel. Those social feeds are usually generated by users watching the show on their own TV, so your comparative latency usually goes down to 15 to 20 seconds, but you are still significantly behind live TV.